Johnny Tsou's GitHub page

NTUT EE Dept., CE Team, Master's Student

Model Optimization - YOLOv3 Pruning

The main purpose of this work is to optimize a custom YOLOv3 model so that it can run detection in real time when deployed on an edge device. The optimization follows a training scheme called network slimming [1], which consists of the three main steps below and is applied here to a YOLOv3 model trained on a custom road damage detection dataset. The pruning strategy prunes both channels and layers to reach a higher model compression ratio and faster inference.

1. Sparse Training

To find out which neurons (channels) contribute little to the model's output so that they can be pruned, we first perform sparse training. This step applies L1 regularization to the scaling factor (gamma) of every batch normalization (BN) layer, which drives the gammas of unimportant channels toward 0 and creates a large gap between important and unimportant channels.
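A minimal sketch of the sparsity penalty, assuming plain SGD on a flat list of BN gammas. The penalty strength `s`, the learning rate `lr`, the toy gradients, and the helper names are illustrative assumptions, not values from this project. The L1 term adds `s * sign(gamma)` to each gamma's gradient, so channels whose task gradient does not resist it drift toward 0.

```python
def l1_subgradient(x: float) -> int:
    """Subgradient of |x|: +1 for x > 0, -1 for x < 0, and 0 at exactly 0."""
    return (x > 0) - (x < 0)


def sparse_step(gammas, task_grads, lr=0.01, s=1e-4):
    """One SGD step on BN scaling factors under loss = task_loss + s * sum(|gamma|).

    `task_grads` holds d(task_loss)/d(gamma) for each gamma; the L1 penalty
    contributes the extra term s * sign(gamma). (lr and s are assumed values.)
    """
    return [g - lr * (dg + s * l1_subgradient(g)) for g, dg in zip(gammas, task_grads)]


# Toy run: an "important" channel whose task gradient balances the penalty,
# next to an "unimportant" one that only feels the L1 pull.
gammas = [1.0, 0.3]
for _ in range(100):
    # Hypothetical constant task gradients, for illustration only.
    gammas = sparse_step(gammas, task_grads=[-0.5, 0.0], lr=0.01, s=0.5)
```

The toy run also shows why a threshold is still needed at pruning time: with a plain subgradient step the small gamma ends up hovering near 0 instead of landing exactly on it. In PyTorch training loops the same update is commonly implemented by adding `s * torch.sign(bn.weight.data)` to `bn.weight.grad` for every `BatchNorm2d` layer after `loss.backward()` and before `optimizer.step()`.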

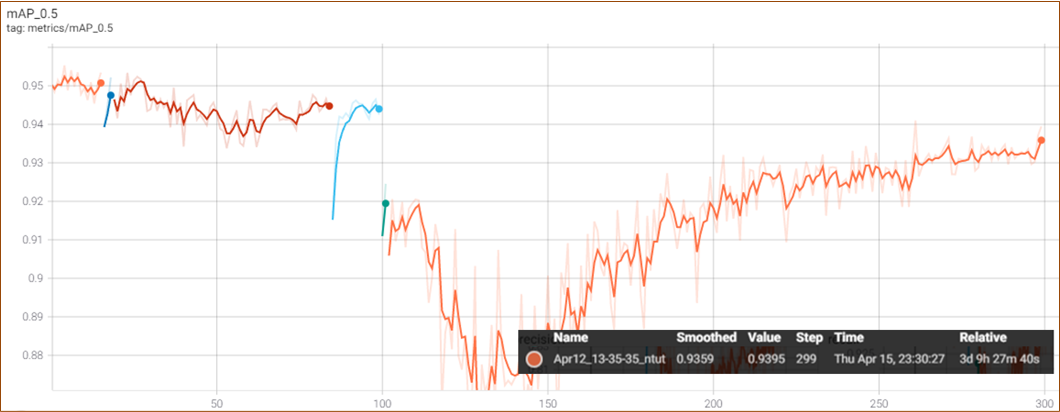

After 300 epochs of sparse training, the distribution of the BN gamma weights has been pushed toward 0 (Fig. 2), which means the model is ready for pruning. Even though the gammas were heavily compressed toward 0, the model's accuracy remained high (mAP: 93.9%, Fig. 1).

Fig. 1 mAP curve during 300 epochs of sparse training.

Fig. 2 Distribution of the BN gamma weights.

2. Model Pruning

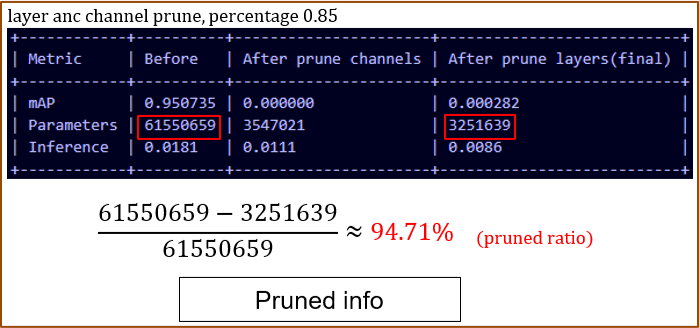

After sparse training, the insignificant channels have been identified and are ready to be pruned. Here I apply both channel pruning and layer pruning to reach a high pruning rate. After trying several pruning profiles, the final configuration was an 85% channel pruning rate plus the removal of 8 shortcuts. Each shortcut is removed together with its 2 CBL layers (Conv + BN + LeakyReLU), so removing 8 shortcuts removes 8 × 3 = 24 layers in total. The detailed pruning information is shown in Fig. 3. The pruning rate on parameters reaches 94.7%. The mAP drops to 0 right after pruning, so step 3 is needed to fine-tune the pruned model and recover the mAP.

Fig. 3 Details of the pruned model.
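The sketch below illustrates the two pruning decisions in plain Python, assuming the BN gammas are available as per-layer lists. It picks one global |gamma| threshold so that the requested fraction of channels falls below it (85% in this project), builds a keep-mask per layer, and counts the layers removed by shortcut pruning. Function and layer names are hypothetical, and the sketch ignores details a real YOLOv3 pruner must handle, such as keeping the channels of layers joined by a shortcut consistent.

```python
def global_threshold(gammas, prune_ratio):
    """Return the |gamma| cut-off below which channels are pruned.

    All scaling factors from all prunable layers are pooled and sorted, so a
    single threshold is shared by the whole network (as in network slimming).
    """
    ranked = sorted(abs(g) for g in gammas)
    idx = min(int(len(ranked) * prune_ratio), len(ranked) - 1)
    return ranked[idx]


def channel_masks(layers, threshold):
    """Keep channels with |gamma| >= threshold; never empty a layer completely."""
    masks = {}
    for name, gammas in layers.items():
        mask = [abs(g) >= threshold for g in gammas]
        if not any(mask):
            # Keep the strongest channel so the layer still produces an output.
            best = max(range(len(gammas)), key=lambda i: abs(gammas[i]))
            mask[best] = True
        masks[name] = mask
    return masks


def layers_removed(num_shortcuts, cbls_per_shortcut=2):
    """Each removed residual block drops its CBLs plus the shortcut layer itself."""
    return num_shortcuts * (cbls_per_shortcut + 1)


# Hypothetical gammas for two tiny layers (not taken from the real model).
layers = {"conv_a": [0.9, 0.01, 0.5, 0.02], "conv_b": [0.001, 0.003]}
all_gammas = [g for gs in layers.values() for g in gs]
threshold = global_threshold(all_gammas, prune_ratio=0.5)
masks = channel_masks(layers, threshold)
```

Passing `prune_ratio=0.85` corresponds to this project's channel setting, and `layers_removed(8)` returns 24, matching the 8 shortcuts and 24 layers described above. Because a single global threshold can strip some layers far harder than others, the guard in `channel_masks` keeps at least one channel per layer.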

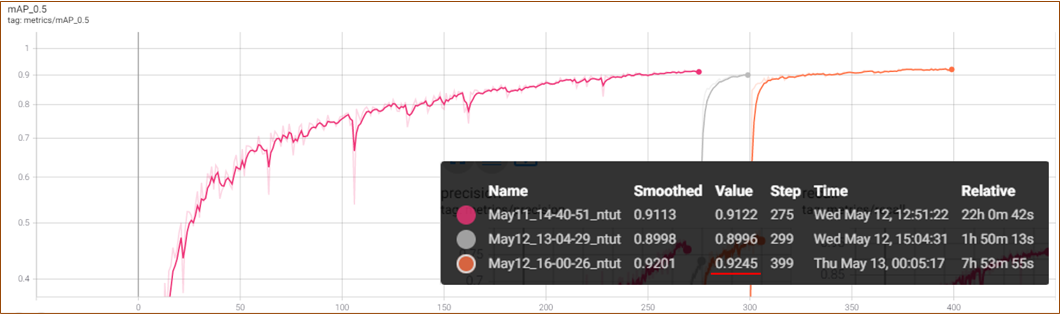

3. Model Finetuning

To recover the mAP lost during pruning, we fine-tune the pruned model. After 500 epochs of training with knowledge distillation, the mAP recovers to 92.4% (Fig. 4). The teacher network was the original model without sparse training.
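The page does not state which distillation loss was used, so the following is only an illustration: the classic soft-target loss of Hinton et al. (2015), written for a single vector of class logits. The temperature value, the example logits, and the function names are assumptions. A detection model such as YOLOv3 would also need terms for its box and objectness outputs, which this sketch does not cover.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax of logits / temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, temperature=4.0):
    """Soft-target distillation loss: T^2 * KL(p_teacher || p_student).

    The teacher (the original model) supplies softened class probabilities that
    the pruned student is pushed to match. Scaling by T^2 keeps the gradient
    magnitude comparable across temperatures.
    """
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(max(ps, 1e-12)))
             for pt, ps in zip(p_t, p_s) if pt > 0)
    return temperature ** 2 * kl


teacher = [2.0, 0.5, -1.0]   # hypothetical teacher logits
student = [1.0, 1.0, -0.5]   # hypothetical student logits
loss = kd_loss(student, teacher)
```

During fine-tuning, a term like this is usually added to the student's ordinary training loss with a weighting factor; that weight is another tuning choice the page does not report.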

Summary

| Model | Input size | Parameters | mAP | Inference time |
|---|---|---|---|---|
| yolov3 | 608x608 | 61550659 | 93.5% | 11.2ms |
| yolov3-pruned | 608x608 | 3251639 (-94.71%) | 92.4% (-1.1%) | 1.8ms (-83.9%) |

Tested on a single NVIDIA GeForce RTX 2080 Ti.
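The reductions in the table can be reproduced with a few lines of arithmetic. This is only a check of the reported numbers. The parameter and latency columns are relative changes, while the mAP column is an absolute difference in percentage points.

```python
def relative_change(before, after):
    """Relative change in percent; negative values mean a reduction."""
    return (after - before) / before * 100.0


param_change = relative_change(61_550_659, 3_251_639)  # about -94.7 %
latency_change = relative_change(11.2, 1.8)            # about -83.9 %
speedup = 11.2 / 1.8                                   # about 6.2x faster
map_change_pp = 92.4 - 93.5                            # about -1.1 percentage points
```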

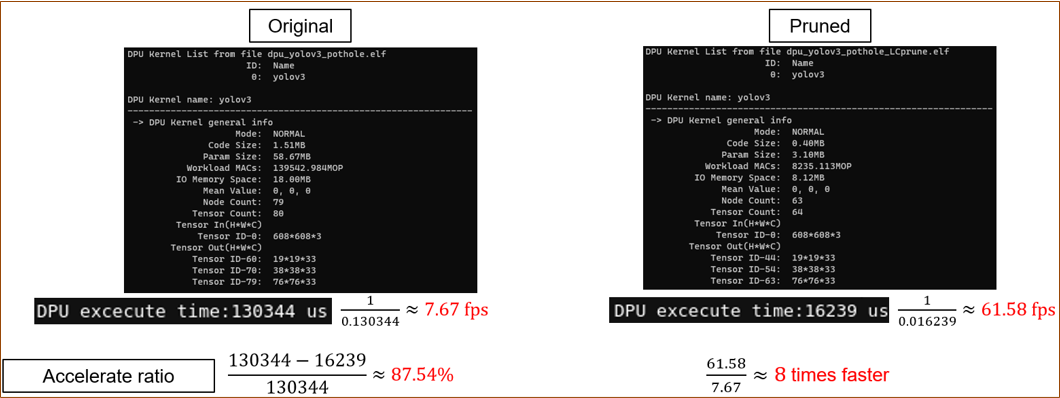

I also tried deploying the model on an FPGA (Xilinx ZCU104) with the DPU. The execution time is significantly reduced (Fig. 5).

Fig. 5 Performance of the detection model deployed on the DPU (FPGA evaluation board: Xilinx ZCU104).

Reference

[1] Z. Liu, J. Li, Z. Shen, G. Huang, S. Yan, C. Zhang. Learning Efficient Convolutional Networks through Network Slimming. In ICCV, 2017.